
How Google Crawls Your Website and Indexes Your Content

Estimated reading time: 6 minutes

You need to understand how Google crawls and indexes your e-commerce website (and content), so you can fix every issue that might obstruct the Google web crawl process.

While many website owners understand (and implement) on-page and off-page SEO strategies, only a few pay attention to technical SEO, even though it also affects a website’s SEO health.

In this guide, you’ll discover how Google crawls and indexes web pages, along with tactics you can use to improve your website’s technical SEO and its rankings on the search engine results page (SERP).

What is search engine crawling, and how does Google crawl a website?

Google strives to provide the most relevant and recent content to searchers. So, Googlebot (the search engine’s bot) crawls websites to dig up helpful content that will display when people search on Google.

You can think of Google website crawling as the scouting of web pages (by Googlebot) to find new or updated content to index. Indexed content can include videos, images, blog posts, and PDFs.

Crawling begins with the discovery of a web page. After Googlebot finds a web page, it follows other links on the page to uncover new content that it also needs to crawl.
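To make the discover-then-follow idea concrete, here is a minimal sketch of link discovery. It is not how Googlebot is actually implemented; it is a simple breadth-first walk, and the `shop.example` pages, the `discover_pages` function, and the in-memory `SITE` dictionary are all hypothetical stand-ins so the example runs without a network connection.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def discover_pages(start_url, fetch):
    """Breadth-first discovery: visit a page, then queue the links it contains.

    `fetch` is a caller-supplied function that returns a page's HTML,
    or None when the page is missing.
    """
    seen = {start_url}
    queue = deque([start_url])
    order = []
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # missing page: nothing to read or follow
        order.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return order


# Hypothetical shop kept in memory; "/old-deal" is linked but does not exist.
SITE = {
    "https://shop.example/": '<a href="/shoes">Shoes</a> <a href="/bags">Bags</a>',
    "https://shop.example/shoes": '<a href="/">Home</a>',
    "https://shop.example/bags": '<a href="/old-deal">Old deal</a>',
}

crawled = discover_pages("https://shop.example/", SITE.get)
```

In this toy run, the crawler reaches the shoes and bags pages only because the home page links to them, which is why pages with no inbound links tend to be hard for a crawler to find.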

Three reasons Google isn’t crawling your website

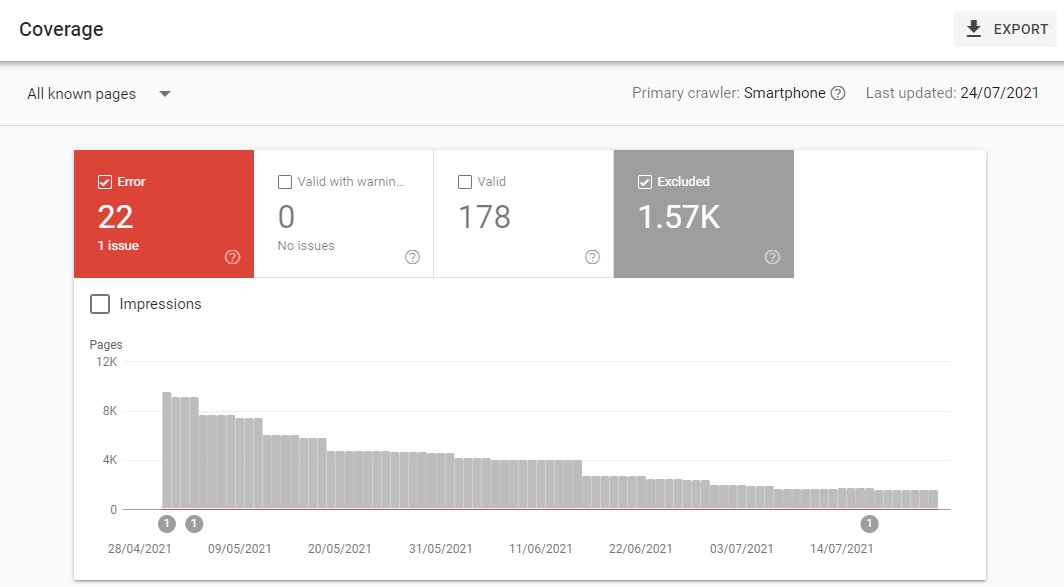

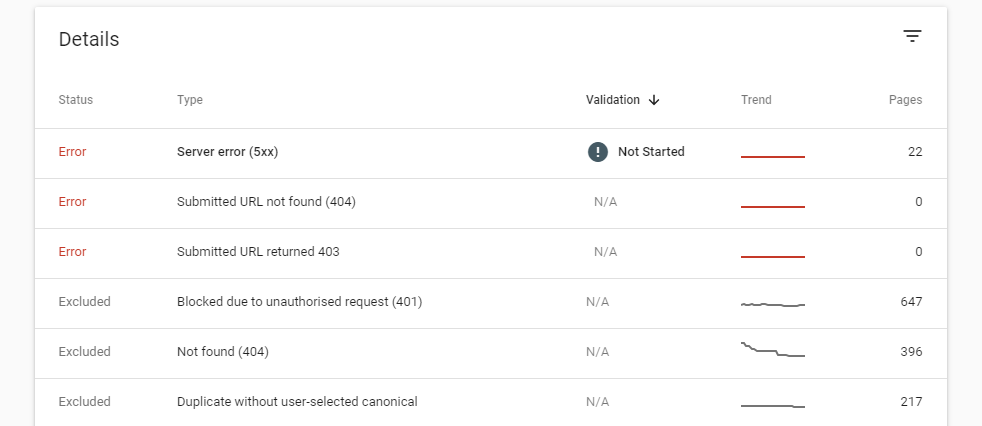

When some essential pages on your e-commerce website aren’t showing up on Google, Googlebot is likely struggling to crawl them.

Below are three possible reasons why Google isn’t crawling your website:

1. Your website has several missing pages

Your customers aren’t the only ones who become frustrated when they land on a missing page. Search bots also expect to find active pages (and not broken links) when they crawl your website.

It’s not uncommon to land on 404 error pages when browsing an e-commerce website. Pages that often return 404 errors include:

- Seasonal deals pages (like a Black Friday deal page)

- Pages of products that are out of stock

- Pages of products that are no longer on sale

You’ll have to fix missing pages on your website to ensure that Google crawls it properly. Specialized tools can dig up missing pages on a website, but you can also find them in Google Search Console (GSC).

After listing every page that returns a 404 error, set up a 301 redirect from each one to a relevant active page. That way, neither shoppers nor Googlebot hit a dead end, which keeps the Google web crawl process running smoothly.
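As a rough illustration of that workflow, the sketch below turns a list of page statuses into a redirect plan. The paths, the `plan_redirects` and `to_rules` helpers, and the status data are hypothetical; in practice the statuses would come from a crawl report or a GSC export. The output format assumes an Apache server using the `Redirect` directive, so adapt it if your platform handles redirects differently.

```python
def plan_redirects(statuses, replacements):
    """Split 404 pages into those with a known replacement and those without.

    statuses:     {path: HTTP status code}
    replacements: {missing path: active path chosen by the site owner}
    """
    redirects, unmapped = {}, []
    for path, status in statuses.items():
        if status != 404:
            continue  # page is fine, nothing to redirect
        if path in replacements:
            redirects[path] = replacements[path]
        else:
            unmapped.append(path)  # needs a manual decision
    return redirects, unmapped


def to_rules(redirects):
    """Format the plan as Apache-style 'Redirect 301 <old> <new>' lines."""
    return [f"Redirect 301 {old} {new}" for old, new in sorted(redirects.items())]


# Hypothetical crawl results for a small shop.
statuses = {
    "/black-friday-2022": 404,
    "/products/red-sneaker": 404,
    "/products/blue-sneaker": 200,
}
replacements = {"/black-friday-2022": "/deals"}

redirects, unmapped = plan_redirects(statuses, replacements)
rules = to_rules(redirects)
```

Pages that land in `unmapped` are deliberately left alone rather than sent to the home page, since each one needs a sensible destination picked by hand.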

2. There are issues with your website’s sitemap

Sitemaps help search bots understand your website’s structure and identify the most important pages on your website.

So, Google’s inability to crawl your web pages could result from an issue with your sitemap.

Some hosting providers automatically generate sitemaps for websites. However, you can use an XML sitemap generator to create one for your website if you have none.

Once you have your sitemap (as an XML file), submit it via GSC.
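If you would rather build the file yourself, the sketch below shows roughly what an XML sitemap generator does. It writes only the required `<loc>` entry for each page, and the `build_sitemap` function and the `shop.example` URLs are hypothetical placeholders.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemap XML string with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical list of the pages you most want Google to find.
pages = [
    "https://shop.example/",
    "https://shop.example/deals",
    "https://shop.example/products/blue-sneaker",
]

sitemap_xml = build_sitemap(pages)
```

Saving `sitemap_xml` as `sitemap.xml` at the root of your website gives you a file you can then submit in GSC.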

3. Your website’s robots.txt file isn’t configured properly

Robots.txt files tell search bots which web pages they should and shouldn’t crawl.

When Google finds no robots.txt file, it treats every page on the website as open to crawling. However, when there’s a robots.txt file on your website, search bots will follow the instructions the file contains.

So, modify the file and change every directive that disallows Googlebot from accessing your important web pages. You can view your website’s robots.txt file by typing “yourwebsite.com/robots.txt” into your browser address bar.
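Before editing the file, it can help to check which of your important pages it currently blocks. The sketch below uses Python’s standard `urllib.robotparser` module for that. The robots.txt content, the URLs, and the `blocked_pages` helper are hypothetical, and Python’s parser does not replicate every detail of how Google interprets rules, so treat the result as a first pass rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that accidentally blocks all product pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /products/
"""


def blocked_pages(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical pages that should be crawlable.
important = [
    "https://shop.example/products/red-sneaker",
    "https://shop.example/deals",
]

blocked = blocked_pages(ROBOTS_TXT, important)
```

Here the product page ends up in `blocked` because of the `Disallow: /products/` line, which is the kind of directive you would want to remove or narrow.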

What is search engine indexing?

After Googlebot detects relevant content on your website, it proceeds to store the information in an index. Your web pages will show up on Google as search results only after indexing.

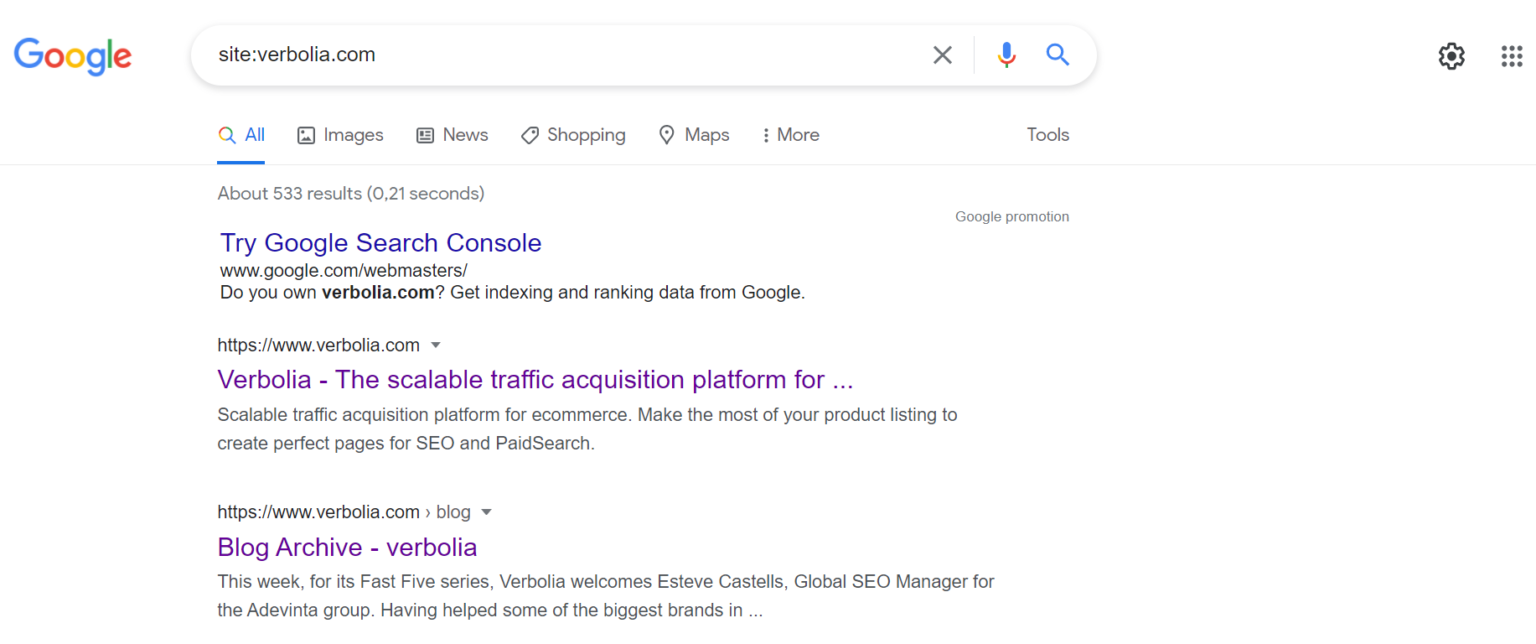

You can check if Google has indexed your website by typing “site:yourwebsite.com” into the Google search bar. You can also use “site:yourwebsite.com/url-slug” to check if a specific page is in the Google index.

Three foolproof ways to make Google index your web pages fast

Your content won’t show up in the SERP if Google doesn’t index it.

Have you noticed that Google indexes your content slowly or isn’t indexing your website at all? Here are three strategies you could implement:

1. Modify your website’s robots.txt file

There might be crawl blocks in your robots.txt file that keep Google from crawling, and therefore indexing, certain web pages on your website.

Worst of all, your robots.txt file could be disallowing Google from crawling (and indexing) all the pages of your website. So, check your robots.txt file for any directives that cause this and make the necessary modifications.

2. Weed out all less-important pages

You’ll end up exhausting your crawl budget when your e-commerce website has numerous unnecessary pages.

So, investigate the pages on your e-commerce website and remove irrelevant ones. Afterward, optimize your important pages for search engines or use Verbolia to create stylish, keyword-rich pages that’ll drive targeted organic traffic to your website.

3. Build high-quality backlinks

Backlinks, an important Google ranking factor, help Google gauge how authoritative and credible your website is. So, building high-quality backlinks can improve the speed at which Google indexes your content.

Build web pages Google crawlers can’t ignore

Your SEO efforts will pay off only when your web pages eventually rank at the top of SERPs. So, it’s not enough for Google to crawl and index your content. Make sure you also apply strategies that help your content show up in Google’s top results.

Do you want to improve your website’s rankings by creating unique web pages optimized for search engines? Do not hesitate to request a free demo.
