
Is my eCommerce website optimized for search engine bots?

Estimated reading time: 5 minutes

There are plenty of ways to drive traffic to your store, but ranking high in search engines is among the most powerful.

A high ranking brings you buyer-ready traffic and lends your store instant credibility with shoppers, which opens up big revenue opportunities.

But how do you know whether your eCommerce website is optimized for search engine bots? In this post, we'll explore how to find out and how to optimize it further.

How does Google find and rank pages?

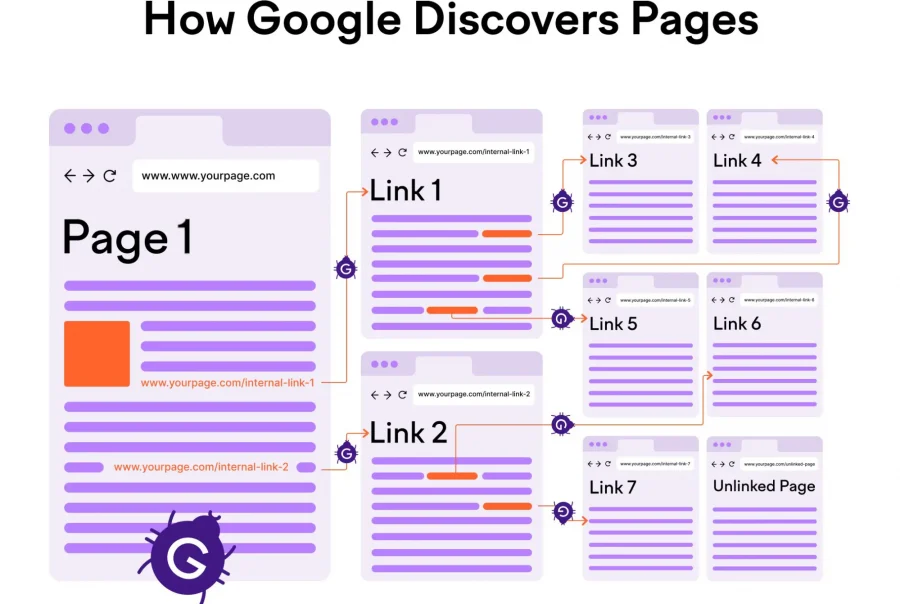

Google and other search engines send out crawlers (also called spiders or bots) to find pages. These bots download a copy of each web page, and the search engine then analyzes everything on it, from the content to the links, to decide how to rank it.

Whenever one of these bots finds a link to a new page, it’ll add that to its “to-crawl” list, so to speak.
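To make the "to-crawl" list idea concrete, here is a toy sketch in Python. It models a store as a hypothetical in-memory map from each page to the pages it links to, then walks it breadth-first from the homepage. Real crawlers like Googlebot fetch pages over HTTP, respect robots.txt, and prioritize their queue in ways Google doesn't publish, so treat this as an illustration of how link discovery works, not of how Google schedules crawls.

```python
from collections import deque

def crawl_order(start_url: str, links: dict[str, list[str]]) -> list[str]:
    """Toy crawler: visit pages breadth-first, queuing every newly found link."""
    to_crawl = deque([start_url])  # the bot's "to-crawl" list
    seen = {start_url}             # pages already queued, so none is visited twice
    crawled = []
    while to_crawl:
        page = to_crawl.popleft()
        crawled.append(page)       # a real bot would download and analyze the page here
        for link in links.get(page, []):
            if link not in seen:   # only pages the bot hasn't seen join the queue
                seen.add(link)
                to_crawl.append(link)
    return crawled

# Hypothetical store: "/clearance" exists, but no page links to it.
site = {
    "/": ["/shoes", "/hats"],
    "/shoes": ["/shoes/red-sneakers", "/"],
    "/hats": [],
    "/shoes/red-sneakers": [],
    "/clearance": [],
}

print(crawl_order("/", site))
# ['/', '/shoes', '/hats', '/shoes/red-sneakers']
```

Notice that /clearance never gets crawled because nothing links to it. That's why the internal-linking advice later in this post matters.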

How to check if Google indexed your pages

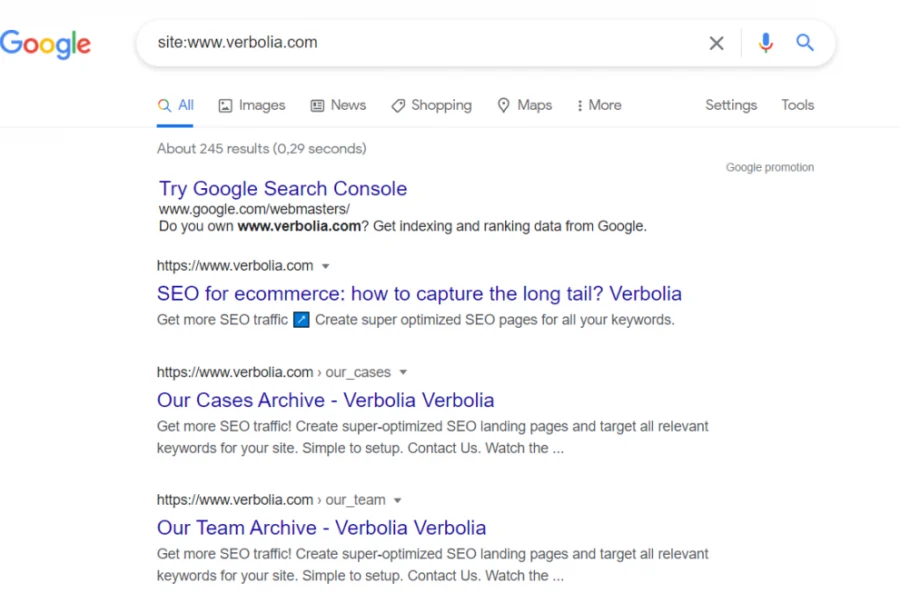

To see if Google indexed your website, head to Google and search for site:yourwebsite.com.

The “About X results” figure gives a rough estimate of how many pages on your site Google has indexed so far.

You can also do this for individual pages. Simply append the page's path to your domain in the query (for example, site:yourwebsite.com/page-slug) and search.

If the page shows up in the results, it's indexed. If it doesn't, it most likely isn't.
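If you run these checks often, a few lines of Python can build the query and the search URL for you. This is a minimal sketch: the domain and page path are placeholders, and the code only builds a URL for you to open in your browser. It doesn't fetch or parse Google's results.

```python
from urllib.parse import urlencode

def site_query(domain: str, path: str = "") -> str:
    """Build a site: query for a whole domain or, if a path is given, one page."""
    target = domain.rstrip("/")
    if path:
        target += "/" + path.lstrip("/")
    return f"site:{target}"

def google_search_url(query: str) -> str:
    """Turn a query into a Google search URL (urlencode escapes ':' and '/')."""
    return "https://www.google.com/search?" + urlencode({"q": query})

print(site_query("yourwebsite.com"))
# site:yourwebsite.com
print(google_search_url(site_query("yourwebsite.com", "/products/blue-shoes")))
# https://www.google.com/search?q=site%3Ayourwebsite.com%2Fproducts%2Fblue-shoes
```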

Ways to optimize your store for search bots

If you find that your eCommerce website isn't optimized for search engine bots, don't worry. There are a few things you can do.

1. Fix up your robots.txt file

The robots.txt file is a plain-text file at the root of your website that tells search engine bots which parts of your site they are and aren't allowed to crawl. Some site owners use it to keep bots from overloading their servers with requests.

However, you may be accidentally blocking search bots from crawling your pages, which keeps those pages from being properly indexed.

To check for a sitewide block, visit yourwebsite.com/robots.txt and look for either of the following rule groups:

User-agent: Googlebot
Disallow: /

User-agent: *
Disallow: /

The first group tells Googlebot not to crawl any page on your site; the second tells every bot the same thing. Delete whichever group you find, and you're good to go.

Individual pages may be blocked, too. To check a page, paste its URL into Google Search Console's URL Inspection tool and open the Coverage section. If it says “Crawl allowed? No: blocked by robots.txt,” go back to your robots.txt file, find the Disallow rule that matches that page, and remove it.
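You can also test robots.txt rules before you publish them. The sketch below uses the urllib.robotparser module from Python's standard library to ask whether a given user agent may fetch a URL. The rules and URLs are placeholders. Python's parser follows the original robots.txt conventions and doesn't support every extension Google recognizes (such as * wildcards inside paths), so treat the URL Inspection tool as the final word.

```python
from urllib.robotparser import RobotFileParser

def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # read rules from text instead of downloading them
    return parser.can_fetch(user_agent, url)

sitewide_block = "User-agent: *\nDisallow: /\n"
checkout_only = "User-agent: *\nDisallow: /checkout/\n"

product = "https://yourwebsite.com/products/blue-shoes"
print(is_crawl_allowed(sitewide_block, product))  # False: every bot is blocked
print(is_crawl_allowed(checkout_only, product))   # True: only /checkout/ is off-limits
print(is_crawl_allowed(checkout_only, "https://yourwebsite.com/checkout/"))  # False
```

To check your live file instead of a pasted copy, RobotFileParser also offers set_url() and read(), which download robots.txt from your site.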

2. Check your sitemap

Sitemaps are essentially your website's blueprints: they list the pages you want search engines to find, which makes it easier for bots to crawl every page in the sitemap.

Google can find pages that aren't in your sitemap, but there's no drawback to including them, and doing so can improve both the chance and the speed of your pages getting indexed.

Head back to Google Search Console's URL Inspection tool to see if a page is in your sitemap. Paste in the URL. If you see the following messages, the page needs to be added to your sitemap:

- “URL is not on Google”

- “Sitemap: N/A”
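If you'd rather check many URLs at once, you can compare them against your sitemap file directly. This sketch uses Python's built-in xml.etree.ElementTree to pull every <loc> entry out of a standard XML sitemap and report which URLs are missing. It assumes a single sitemap in the sitemaps.org format; a sitemap index that points to other sitemaps would need one more step. The sitemap content and URLs are placeholders.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard sitemaps (sitemaps.org protocol)
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(sitemap_xml: str) -> set[str]:
    """Collect every <loc> URL listed in a <urlset> sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}

def missing_from_sitemap(sitemap_xml: str, urls: list[str]) -> list[str]:
    """Return the URLs the sitemap does not list, in their original order."""
    listed = sitemap_urls(sitemap_xml)
    return [url for url in urls if url not in listed]

sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://yourwebsite.com/</loc></url>
  <url><loc>https://yourwebsite.com/products/blue-shoes</loc></url>
</urlset>"""

print(missing_from_sitemap(sitemap, [
    "https://yourwebsite.com/products/blue-shoes",
    "https://yourwebsite.com/products/red-hats",
]))
# ['https://yourwebsite.com/products/red-hats']
```

The comparison is an exact string match, so make sure the URLs you check use the same protocol and trailing-slash style as your sitemap.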

3. Cut out low-quality pages

Google's crawling resources are limited, so every site gets a “crawl budget”: Google won't necessarily crawl every page on your site.

That's why you should trim any low-quality pages.

Google says that if your site has fewer than a few thousand URLs, crawl budget isn't a big worry. Still, it never hurts to clean up your website's pages every so often. You'll make better use of your crawl budget and make sure your customers spend as little time as possible on pages that won't lead to sales.

4. Add internal links whenever possible and relevant

Internal links are a key component of SEO because they show search engines how your site is structured. You can also use them to your advantage to get your pages indexed faster.

All you do is link to your new pages wherever relevant from your best-performing pages. Google tends to recrawl your most popular, established pages more often, so it spots those links sooner, cutting the time it takes to get the new pages indexed and ranked.

While you're adding internal links, remove rel="nofollow" from any internal links that have it. Nofollow asks Google not to follow the link, so it won't help Google find or value the page you're linking to.
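To find internal links that carry nofollow, you can scan a page's HTML. The sketch below uses Python's built-in html.parser to list links on the same domain whose rel attribute includes nofollow. The page URL and HTML are placeholders; in practice you'd feed it the HTML of each page you want to audit.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class NofollowInternalLinks(HTMLParser):
    """Collect same-domain <a> links whose rel attribute contains "nofollow"."""

    def __init__(self, page_url: str):
        super().__init__()
        self.page_url = page_url
        self.host = urlparse(page_url).netloc
        self.found: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        rel_values = (attributes.get("rel") or "").lower().split()
        if not href or "nofollow" not in rel_values:
            return
        absolute = urljoin(self.page_url, href)     # resolve relative links like "/products/..."
        if urlparse(absolute).netloc == self.host:  # keep internal links only
            self.found.append(absolute)

def nofollow_internal_links(html: str, page_url: str) -> list[str]:
    parser = NofollowInternalLinks(page_url)
    parser.feed(html)
    parser.close()
    return parser.found

html = """
<a href="/products/blue-shoes" rel="nofollow">Blue shoes</a>
<a href="/products/red-hats">Red hats</a>
<a href="https://partner.example/" rel="nofollow sponsored">Partner</a>
"""
print(nofollow_internal_links(html, "https://yourwebsite.com/blog/new-arrivals"))
# ['https://yourwebsite.com/products/blue-shoes']
```

The external partner link is left alone on purpose: nofollow on outbound links is a separate decision from fixing your internal ones.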

5. Get backlinks

Backlinks are the other side of the link-building coin. If other sites link to your store, Google takes that as a sign it has valuable content.

Backlinks aren't mandatory, but they can help Google discover your pages faster and, when they come from authoritative sources, give you an SEO boost.

Stop worrying about optimization and get back to business

Some of these tips get into the SEO weeds. You're in business to sell your products, not to untangle technical SEO, which is why you should get Verbolia.

Verbolia handles many of these for you by creating lightning-fast, highly optimized product pages using a few keywords you provide. Plus, you can monitor crawling data and the indexation status of your pages to make sure everything’s ranking how it should.

Interested? Schedule a free demo to learn more.
