The top risks of implementing a programmatic SEO strategy


Programmatic SEO sounds like a dream — publish thousands (or even millions) of pages in no time, automate long-tail targeting, and scale your organic traffic like never before.

But here’s the catch: if you don’t approach it carefully, it can do more harm than good.

Whether you’re selling thousands of products or operating in a content-rich vertical, programmatic SEO can absolutely be a growth driver. But before you start spinning up URLs at scale, there are two major risks you need to understand:

- Going too fast

- Compromising on quality

Let’s break it down!

Risk #1: Going too fast

Programmatic SEO is all about scale, but dropping a million new URLs onto your site overnight? That’s a red flag.

Google allocates a crawl budget to every site: essentially, how much time and resources Googlebot is willing to spend crawling it. If your site structure suddenly explodes with tens of thousands of new pages, Google can’t (and won’t) crawl them all immediately. Instead, it may:

- Ignore some of your new pages entirely

- Slow down crawl rate overall

- Delay indexing for your most important content

The result? You waste time, server resources, and potentially confuse search engines about what matters most.

What to do instead

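Roll out in batches. Start small, perhaps a few thousand pages depending on your current site size, and monitor how Google crawls and indexes them (for example, in the Page indexing and Crawl stats reports in Google Search Console). Once you see healthy crawling and indexing, increase the batch size step by step.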
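One way to put "start small, then scale" into practice is a simple rule for sizing each release wave. The sketch below is a minimal illustration, not a Google recommendation: it assumes you track how many pages from the previous wave have been indexed (for example, from a Search Console export), and the function name `next_batch_size`, the 70% `min_ratio`, the doubling `growth` factor and the `max_batch` cap are all hypothetical values you would tune for your own site.

```python
def next_batch_size(current_batch: int, indexed: int, published: int,
                    min_ratio: float = 0.7, growth: float = 2.0,
                    max_batch: int = 50_000) -> int:
    """Decide how many pages to publish in the next release wave.

    Hypothetical rule: grow the batch only when enough pages from the
    previous wave were indexed; otherwise hold at the current size.
    All thresholds are illustrative assumptions, not Google guidance.
    """
    if published <= 0:
        raise ValueError("published must be positive")
    indexed_ratio = indexed / published
    if indexed_ratio >= min_ratio:
        # Healthy indexing: grow the next wave, but never past the cap.
        return min(int(current_batch * growth), max_batch)
    # Weak indexing: hold the batch size and investigate before scaling.
    return current_batch


if __name__ == "__main__":
    # 2,000 pages published, 1,600 indexed (80%): the next wave doubles.
    print(next_batch_size(2_000, indexed=1_600, published=2_000))  # 4000
```

Holding the batch size (rather than stopping entirely) keeps the rollout moving while you check whether the unindexed pages share a quality or crawlability problem.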

Risk #2: Poor or duplicate content at scale

Automation doesn’t mean cutting corners.

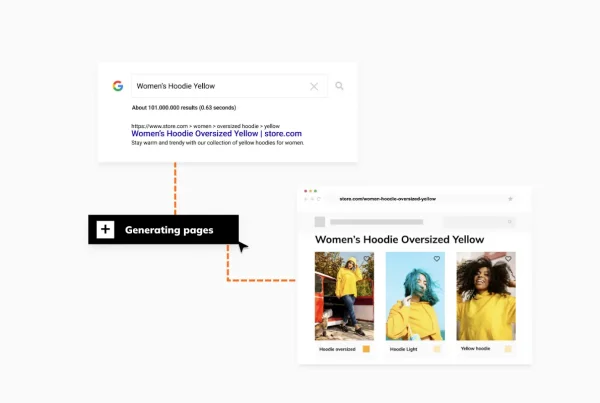

One of the biggest risks with programmatic SEO is publishing thin, duplicated, or low-quality content, especially when pages are generated without careful keyword targeting or any real value for the user.

If your pages don’t meet a quality threshold, Google may:

- Consider them duplicate or near-duplicate

- Fail to index them

- Or worse: treat your site as spammy

And we all know how hard it is to recover from that reputation in SEO.

What to do instead

Focus on intent and quality. Every programmatically generated page should:

- Target a specific, relevant long-tail keyword

- Have unique and helpful content (even if it’s templated)

- Be useful to real users — not just bots

Don’t generate pages for the sake of it. Generate value.
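To catch near-duplicate pages before they go live, you can compare generated pages against each other. The sketch below uses word shingles and Jaccard similarity, a common generic heuristic; it is not how Google evaluates duplicate content, and the function names, the 3-word shingle size and the 0.8 threshold are assumptions chosen for illustration.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping k-word sequences in the text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        # Very short text: treat the whole thing as a single shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict[str, str], threshold: float = 0.8, k: int = 3):
    """Yield (url_a, url_b, score) for every page pair at or above the threshold.

    The 0.8 threshold is a hypothetical starting point, not a known cutoff.
    """
    signatures = {url: shingles(body, k) for url, body in pages.items()}
    for (url_a, sig_a), (url_b, sig_b) in combinations(signatures.items(), 2):
        score = jaccard(sig_a, sig_b)
        if score >= threshold:
            yield url_a, url_b, score
```

Comparing every pair grows quadratically with the number of pages, so for large catalogues a technique such as MinHash with locality-sensitive hashing is the usual way to scale the same idea.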

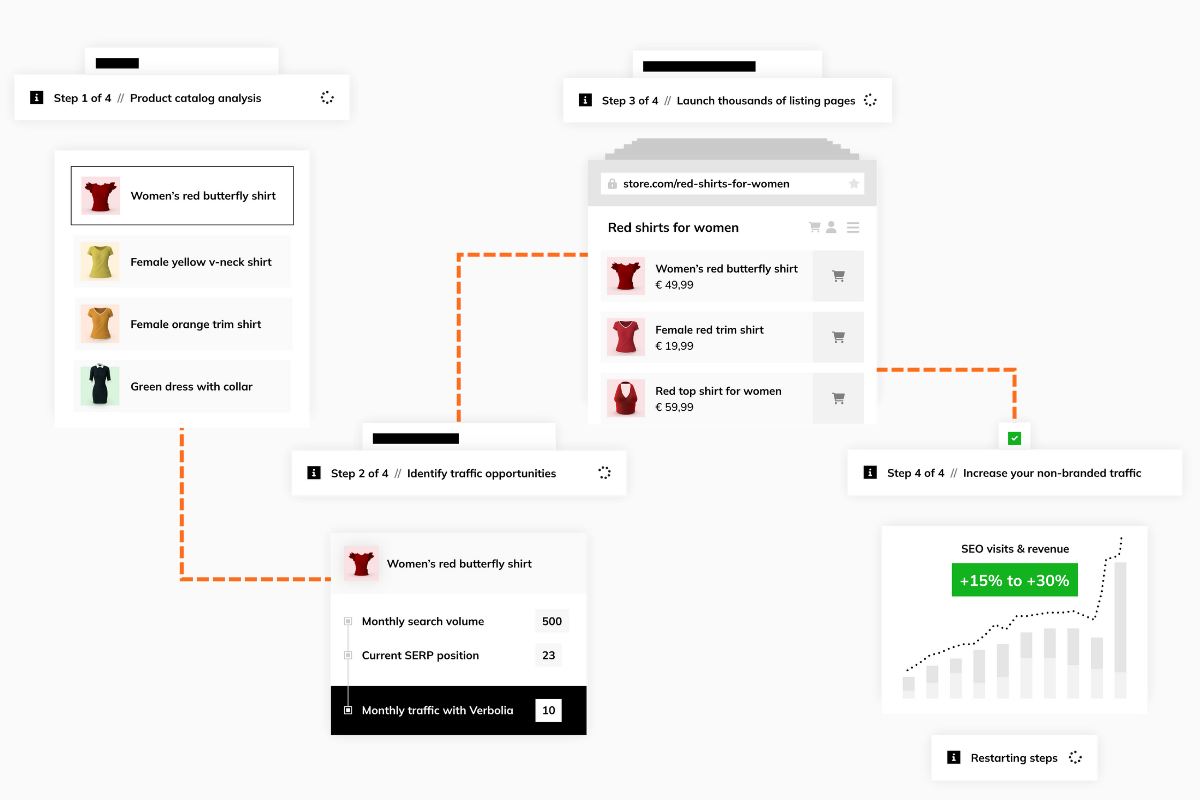

Real example: How Decathlon ensures quality at scale

Decathlon recently shared how they avoid poor or duplicate content while scaling SEO with Verbolia’s Vpages. One key tactic? They set a minimum of four products per listing page before allowing it to go live: a simple but effective way to prevent thin content.

On top of that, their team manually reviewed all automatically generated pages to ensure relevance and quality. It’s a great reminder that even with automation, human oversight still plays a crucial role in building SEO pages that perform.
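Decathlon’s four-product minimum and manual review can be expressed as a simple publish gate. This is a minimal sketch of the idea, not Decathlon’s or Verbolia’s actual implementation: the `ListingPage` model, the `reviewed` flag and the `can_publish` function are hypothetical, and the gate counts distinct product IDs so that a repeated product does not inflate the total.

```python
from dataclasses import dataclass, field


@dataclass
class ListingPage:
    """Hypothetical model of a programmatically generated listing page."""
    url: str
    product_ids: list[str] = field(default_factory=list)
    reviewed: bool = False  # set to True once a human has checked the page


MIN_PRODUCTS = 4  # the per-page minimum Decathlon reported using


def can_publish(page: ListingPage, min_products: int = MIN_PRODUCTS) -> bool:
    """Allow a page to go live only if it has enough distinct products
    and has passed human review."""
    return len(set(page.product_ids)) >= min_products and page.reviewed


if __name__ == "__main__":
    pages = [
        ListingPage("/trail-running-shoes-size-42",
                    ["p1", "p2", "p3", "p4", "p5"], reviewed=True),
        ListingPage("/trail-running-shoes-size-49", ["p1", "p2"], reviewed=True),
        ListingPage("/yoga-mats-blue", ["m1", "m2", "m3", "m4"]),  # not reviewed yet
    ]
    live = [p.url for p in pages if can_publish(p)]
    print(live)  # ['/trail-running-shoes-size-42']
```

Pages that fail the gate are simply held back rather than deleted, so they can go live later once more products are in stock or a reviewer has approved them.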

Programmatic SEO can work if you respect the risks

Programmatic SEO is powerful, but it’s not plug-and-play. You need to:

- Respect your site’s crawl budget

- Avoid flooding your domain overnight

- Keep quality at the core of your strategy

Do it right, and you’ll build a scalable, long-tail SEO engine that delivers consistent traffic. Do it wrong, and you could hurt your rankings or, worse, never get indexed in the first place.

Looking to scale SEO through automation without the risks? That’s exactly what Verbolia’s Vpages is built for!
